24 Jul 2012

For the past few months I’ve been a technical editor for PowerShell for Developers, a book by my good friend and colleague Doug Finke that has just become available on Amazon. The purpose of the book is to show how easy it is to accomplish normally mundane, repetitive, or clunky tasks with PowerShell, a simple, concise scripting language that you already have installed on your box.

On my current project, I was recently tasked with setting up the build. Of course, build scripts aren’t exactly glorious or interesting, and the prospect of dealing with MSBuild’s XML files gives me a fleeting sense of vertigo.

But fortunately, there’s a much, much less painful way to make build scripts by using psake.

A Brief Introduction to psake

(If you already know psake, skip to the next section!)

psake (pronounced sah-kay) is a build scripting DSL of the make, rake, jake, and cake variety, built on PowerShell. Basically, you define tasks as small script blocks and establish dependencies between them.

My projects tend to be organized like this:

```
+-MyApp\
  +-docs\
  +-src\
  | +-MyApp.sln
  +-lib\
  +-tools\
  | +-build\
  | | +-psake.psm1
  | | +-psake.ps1
  | | +-default.ps1
  +-psake.cmd
```

Here, psake.psm1, psake.ps1, and psake.cmd are from psake’s GitHub repo, except that I modify psake.cmd to point to tools\build:

```bat
@echo off

if '%1'=='/?' goto help
if '%1'=='-help' goto help
if '%1'=='-h' goto help

powershell -NoProfile -ExecutionPolicy Bypass -Command "& '%~dp0\tools\build\psake.ps1' %*; if ($psake.build_success -eq $false) { exit 1 } else { exit 0 }"
goto :eof

:help
powershell -NoProfile -ExecutionPolicy Bypass -Command "& '%~dp0\tools\build\psake.ps1' -help"
```

My basic default.ps1 psake script looks like this:

```powershell
properties {
  # Since we're in tools\build, root is up two dirs.
  $rootDir = (Resolve-Path (Join-Path $psake.build_script_dir '..\..')).Path
  $srcDir = Join-Path $rootDir src
  $slnPath = Join-Path $srcDir PsakeDemo.sln

  $buildProperties = @{}
  if(-not $buildType) {
    $buildType = 'Debug'
  }
  # MSBuild takes the configuration and the platform as separate properties.
  $buildProperties.Configuration = $buildType
  $buildProperties.Platform = 'Any CPU'
}

task default -depends Clean, Compile, Test

task Clean {
  Invoke-MSBuild $slnPath 'Clean' $buildProperties
}

task Compile -depends Clean {
  Invoke-MSBuild $slnPath 'Build' $buildProperties
}

task Test -depends Compile {
  # TODO: call test framework CLI inside an `exec { ... }`
}

function Invoke-MSBuild([string]$Path, [string]$Target, [Hashtable]$Properties) {
  Write-Host "Invoking MSBuild" -ForegroundColor Blue
  Write-Host @"
Path : $Path
Target : $Target
Properties : $($Properties | Out-String)
"@
  # Flatten @{ Key1 = 'Value1'; Key2 = 'Value2' } into Key1=Value1;Key2=Value2
  $props = ($Properties.GetEnumerator() | % { $_.Key + '=' + $_.Value }) -join ';'
  exec { msbuild $Path "/t:$Target" "/p:$props" }
}
```

What’s great about psake is that there are really only three things you need to learn.

The first is the properties block up at the top. This guy is responsible for setting up your global state and is a good place to declare a bunch of variables that make working with your script easy to understand. As you can see, I like to declare variables for every directory path that might be needed as well as the .sln path. My convention here is that paths to directories are suffixed with “Dir”, paths to files are suffixed with “Path”, and all paths are always absolute. In the rare case of a need for a relative path, I would suffix the variable with “RelDir” or “RelPath” so the next guy who looks at my script knows what’s going on.

The second is task. This guy is the workhorse of our script; each stage of the build process should be encapsulated in a task so that we can mix and match them later on. Each task can declare a list of other tasks that need to be done first. For instance, it’d be really problematic to run your tests before you’ve compiled! By adding -depends followed by a comma-separated list of other task names, you can be sure that those tasks will run before yours, even if they’re not specified explicitly when you invoke the build. You might also notice that I’ve defined a default task. This is essentially the Main method of our build script. While your script doesn’t have to have one, you should make one so your script can be run without extra parameters. As you can see, I don’t define any work to be done in the default task; I just define what tasks should be done by default. Simple. (By the way, I could technically have just said task default -depends Test and that would have accomplished the same thing, since Test depends on Compile and Compile depends on Clean. I specify all three just to state my intentions.)
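As a rough model of the ordering described above (dependencies run first, and each task runs at most once), here is a small Python sketch. It is not psake's implementation: `run_order` and the `TASKS` table are hypothetical, and the sketch simply treats a circular dependency as an error.

```python
def run_order(tasks, target):
    """Return the order in which tasks would run when `target` is invoked.

    `tasks` maps each task name to the list of task names it depends on.
    """
    order = []           # execution order; each task appears at most once
    in_progress = set()  # tasks currently being visited, used to detect cycles

    def visit(name):
        if name in order:
            return  # already ran earlier in this build
        if name in in_progress:
            raise ValueError(f"circular dependency involving task {name!r}")
        in_progress.add(name)
        for dependency in tasks[name]:
            visit(dependency)  # every dependency runs before the task itself
        in_progress.discard(name)
        order.append(name)

    visit(target)
    return order


TASKS = {
    "default": ["Clean", "Compile", "Test"],
    "Clean": [],
    "Compile": ["Clean"],
    "Test": ["Compile"],
}

print(run_order(TASKS, "default"))  # ['Clean', 'Compile', 'Test', 'default']
```

Replacing the default entry with `["Test"]` produces the same order, which is the equivalence mentioned in the parenthetical above.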

Finally, you need to know about exec { ... }. This guy runs the command inside the braces (typically an external process like msbuild or your test runner), checks its exit code, and throws an error if it isn’t 0. Handy!
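For readers coming from other languages, the exit-code check can be sketched in Python. `exec_checked` and `BuildError` are hypothetical names, and the error text is my own rather than psake's.

```python
import subprocess
import sys


class BuildError(Exception):
    """Raised when an external build step exits with a non-zero code."""


def exec_checked(args):
    """Run an external command and raise BuildError unless it exits with 0."""
    result = subprocess.run(args)
    if result.returncode != 0:
        raise BuildError(f"{args[0]} exited with code {result.returncode}")
    return result


# A step that succeeds, then one that fails with exit code 3.
exec_checked([sys.executable, "-c", "pass"])
try:
    exec_checked([sys.executable, "-c", "import sys; sys.exit(3)"])
except BuildError as error:
    print(error)
```

Python's `subprocess.run(..., check=True)` would raise `CalledProcessError` on its own; the comparison is spelled out here to mirror what the paragraph describes.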

Now, at the bottom of my script, I’ve added a function called Invoke-MSBuild. What I like about this is that I now have a nice PowerShell API for kicking off msbuild. If I need more switches, I can just add more parameters to the function. Plus, working with a Hashtable to set msbuild properties is so much easier than trying to construct key1=value1;key2=value2 by hand.
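The Hashtable flattening inside Invoke-MSBuild is the only fiddly part, so here it is in isolation as a Python sketch. `msbuild_args` is a hypothetical helper that only builds the argument list; it does not run msbuild.

```python
def msbuild_args(path, target, properties):
    """Build an msbuild argument list the way Invoke-MSBuild does.

    `properties` is a dict such as {"Configuration": "Debug"}; it is flattened
    into a single /p:Key1=Value1;Key2=Value2 switch.
    """
    args = ["msbuild", path, f"/t:{target}"]
    props = ";".join(f"{key}={value}" for key, value in properties.items())
    if props:  # leave the switch out entirely rather than pass an empty "/p:"
        args.append(f"/p:{props}")
    return args


print(msbuild_args("PsakeDemo.sln", "Build", {"Configuration": "Debug", "Platform": "Any CPU"}))
# ['msbuild', 'PsakeDemo.sln', '/t:Build', '/p:Configuration=Debug;Platform=Any CPU']
```

Skipping the switch when the dictionary is empty avoids handing msbuild a bare `/p:`, which the PowerShell function would do if given an empty Hashtable.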

Templating CommonAssemblyInfo.cs

In my src directory, I like to create two files and add them to my solution: CommonAssemblyInfo.cs and CommonAssemblyInfo.cs.template. The idea is that the .cs file is the one I reference in my projects (be sure to use “add as link”) and the .cs.template file is the one PowerShell reads to create it. Let’s take a look at the template.

```csharp
using System.Reflection;
using System.Runtime.InteropServices;

[assembly: AssemblyConfiguration("$buildType")]
[assembly: AssemblyCompany("North Horizon")]
[assembly: AssemblyProduct("PsakeDemo")]
[assembly: AssemblyCopyright("Copyright © Daniel Moore $((Get-Date).Year)")]
[assembly: ComVisible(false)]
[assembly: AssemblyVersion("$version")]
[assembly: AssemblyFileVersion("$version")]
```

First off, there’s no doubt how to read this file. It is C#. Well, kinda. But the point is that anybody can read this file and make small changes as necessary, even if they don’t know PowerShell or psake. Quite clearly we’re using PowerShell variables throughout ($buildType and $version) and, amazingly, we’ve got an honest-to-goodness PowerShell command in play: $((Get-Date).Year). So this must require a framework of a couple thousand lines to do the parsing and such, yeah? Actually, it’s more like two lines:

```powershell
properties {
  # ...
  $version = '1.0.0.0' # TODO: obtain version from build server or something.
  # ...
}

task Version {
  $templateName = 'CommonAssemblyInfo.cs.template'
  $templatePath = Join-Path $srcDir $templateName
  $outPath = Join-Path $srcDir ([IO.Path]::GetFileNameWithoutExtension($templateName))

  # <1>
  $content = [IO.File]::ReadAllText($templatePath, [Text.Encoding]::Default)

  # <2>
  Invoke-Expression "@`"`r`n$content`r`n`"@" | Out-File $outPath -Encoding utf8 -Force
}
```

I actually learned this technique while editing PowerShell for Developers – this is just one small example from chapter 4, which goes into much greater depth about the awesome stuff you can do with templating.

So what does this do? Well, on mark <1>, we simply read in all the text from our template as a string using the tried-and-true .NET File API. I specify the encoding here so the copyright symbol gets read correctly.

On mark <2>, we wrap that string in a double-quoted here-string and invoke the whole thing as a script. This is how we leverage the entire PowerShell ecosystem, with string interpolation and subexpressions, in almost no code.
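The "evaluate the template as an interpolated string" trick carries over to other languages. As a rough Python analogue, assuming the template uses f-string `{...}` placeholders instead of PowerShell's `$var` and `$(...)`, you can wrap the text in an f-string literal and evaluate it. `expand_template` and `render_file` are hypothetical names; like Invoke-Expression, this executes whatever expressions the template contains, so it only makes sense for trusted templates, and any literal braces in the template would need doubling (`{{`, `}}`). The sketch also assumes UTF-8 input, whereas the PowerShell version reads with the system default encoding.

```python
import datetime
import os


def expand_template(text, variables):
    """Evaluate `text` as the body of an f-string, resolving names from `variables`."""
    # repr() produces a valid string literal; the "f" prefix turns each {...}
    # inside it into an expression, much like wrapping the text in @" ... "@.
    literal = "f" + repr(text)
    return eval(literal, {"datetime": datetime}, dict(variables))


def render_file(template_path, variables, encoding="utf-8"):
    """Write e.g. CommonAssemblyInfo.cs next to CommonAssemblyInfo.cs.template."""
    out_path = os.path.splitext(template_path)[0]  # strips only ".template"
    with open(template_path, encoding=encoding) as f:  # explicit encoding keeps "©" intact
        content = f.read()
    with open(out_path, "w", encoding="utf-8") as f:
        f.write(expand_template(content, variables))
    return out_path


TEMPLATE = '''using System.Reflection;
[assembly: AssemblyConfiguration("{buildType}")]
[assembly: AssemblyCopyright("Copyright © Daniel Moore {datetime.date.today().year}")]
[assembly: AssemblyVersion("{version}")]
'''

print(expand_template(TEMPLATE, {"buildType": "Debug", "version": "1.0.0.0"}))
```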

This is, of course, just the beginning – but that’s what makes PowerShell so awesome. With a few lines of code, you can leverage the whole of .NET in an intuitive and concise manner, and you’re not limited to just things the PowerShell team has thought of. PowerShell’s out-of-the-box cmdlet library makes common and often tough tasks easy, but the language provides all the building blocks you need to build anything you can imagine.

If you’re looking to get started in PowerShell or want to expand your toolbox of patterns and techniques, I can’t recommend enough that you take a look at PowerShell for Developers and see what else you can start automating.

05 Jun 2012

When I explain commands in WPF, I tend to say they are simply “bindable methods.” I like this definition not only for its brevity, but for its composition of powerful ideas into a single construct. It reinforces the axiom of “no code left behind” that we push so hard in WPF. But this leaves behind almost two thirds of the functionality given to us by ICommand: the parameter and CanExecute. Parameters, admittedly, are needed less often in most designs, as commands tend to operate on the view model to which they are attached. CanExecute, on the other hand, is used fairly often and is also fairly broken in most implementations.

On my last project, I had a teammate who was learning WPF and was confused about why a button bound to a command was not becoming enabled after its CanExecute conditions became true. Our implementation of ICommand relied on CommandManager.RequerySuggested to raise the ICommand.CanExecuteChanged event, and therein lay the problem. Here’s what MSDN says about RequerySuggested:

Occurs when the CommandManager detects conditions that might change the ability of a command to execute.

Hm, well that’s a little vague for my taste. What conditions? And might change? So, after a little reflection, I found that the internal class System.Windows.Input.CommandDevice is responsible for raising RequerySuggested based on UI events. Here is the relevant method:

```csharp
private void PostProcessInput(object sender, ProcessInputEventArgs e)
{
    if (e.StagingItem.Input.RoutedEvent == InputManager.InputReportEvent)
    {
        // abridged
    }
    else if (
        e.StagingItem.Input.RoutedEvent == Keyboard.KeyUpEvent ||
        e.StagingItem.Input.RoutedEvent == Mouse.MouseUpEvent ||
        e.StagingItem.Input.RoutedEvent == Keyboard.GotKeyboardFocusEvent ||
        e.StagingItem.Input.RoutedEvent == Keyboard.LostKeyboardFocusEvent)
    {
        CommandManager.InvalidateRequerySuggested();
    }
}
```

As you can see, this is really a poor way to detect if CanExecute has changed. In many circumstances, view model state changes are based on far more than mouse and keyboard events. In fact, when Microsoft Patterns & Practices made their DelegateCommand, they avoided RequerySuggested for just this reason.

So I advised my colleague to create a normal bindable property called CanFooCommandExecute and bind the button’s IsEnabled property to it. I think he ended up calling CommandManager.InvalidateRequerySuggested himself to limit the amount of rework, but that seems far from ideal to me.

This got me thinking, though: why not leverage a bindable property to enable and disable commands? Or, even better, why not define the conditions of a command being enabled in terms of bindable properties?

Fortunately, we already have everything we need to accomplish this. Instead of taking a Func<bool> for our CanExecute delegate, we’ll take an Expression<Func<bool>>. Then we can look at the AST given to us and find any member accesses with an INotifyPropertyChanged root. While we’re at it, we could also look for method calls on INotifyCollectionChanged and IBindingList objects, since they tell us when their items change.
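Python has no direct counterpart to C# expression trees, but the idea can be sketched by parsing the condition's source text with the `ast` module, collecting attribute chains rooted at the view model, and subscribing to change notifications along each chain. This is a hypothetical, simplified model, not the ExpressionCommand implementation: `Bindable` stands in for BindableBase/INotifyPropertyChanged, the condition is a string rooted at `self`, and collection notifications (INotifyCollectionChanged, IBindingList) are left out.

```python
import ast


class Bindable:
    """Stand-in for BindableBase: assigning a public attribute notifies handlers."""

    def __init__(self):
        object.__setattr__(self, "_handlers", [])

    def subscribe(self, handler):
        self._handlers.append(handler)

    def unsubscribe(self, handler):
        self._handlers.remove(handler)

    def __setattr__(self, name, value):
        object.__setattr__(self, name, value)
        if not name.startswith("_"):
            for handler in list(self._handlers):  # copy: handlers may resubscribe
                handler(self, name)


def attribute_chains(source, root_name="self"):
    """Member-access chains rooted at root_name, e.g. ('value2', 'value4', 'value3')."""
    chains = set()
    for node in ast.walk(ast.parse(source, mode="eval")):
        path, current = [], node
        while isinstance(current, ast.Attribute):
            path.append(current.attr)
            current = current.value
        if path and isinstance(current, ast.Name) and current.id == root_name:
            chains.add(tuple(reversed(path)))
    return chains


class ExpressionCommand:
    def __init__(self, execute, can_execute_source, root):
        self._execute = execute
        self._code = compile(can_execute_source, "<can_execute>", "eval")
        self._chains = attribute_chains(can_execute_source)
        self._root = root
        self._watched = {}             # id(obj) -> (obj, property names the condition reads)
        self.can_execute_changed = []  # callbacks playing the role of CanExecuteChanged
        self._resubscribe()

    def can_execute(self):
        return bool(eval(self._code, {}, {"self": self._root}))

    def execute(self):
        if self.can_execute():
            self._execute()

    def _resubscribe(self):
        # Drop every old subscription and walk each chain from the root again, so a
        # replaced intermediate object is picked up and the old one is released.
        for obj, _ in self._watched.values():
            obj.unsubscribe(self._on_changed)
        self._watched = {}
        for chain in self._chains:
            obj = self._root
            for name in chain:
                if not isinstance(obj, Bindable):
                    break  # e.g. a None link: nothing further down to watch yet
                self._watched.setdefault(id(obj), (obj, set()))[1].add(name)
                obj = getattr(obj, name, None)
        for obj, _ in self._watched.values():
            obj.subscribe(self._on_changed)

    def _on_changed(self, sender, name):
        entry = self._watched.get(id(sender))
        if entry is None or name not in entry[1]:
            return  # a property the condition never reads
        self._resubscribe()
        for callback in list(self.can_execute_changed):
            callback()


class SubViewModel(Bindable):
    def __init__(self):
        super().__init__()
        self.value3 = 0
        self.value4 = None


class ViewModel(Bindable):
    def __init__(self):
        super().__init__()
        self.value1 = 0
        self.value2 = SubViewModel()
        self.simple_command = ExpressionCommand(lambda: None, "self.value1 > 5", self)
        self.complex_command = ExpressionCommand(
            lambda: None, "self.value2.value4.value3 > 5", self)
```

Resubscribing the whole tree on every relevant change is the simplest way to handle a reassigned intermediate property; a real implementation might rewire only the affected branch.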

I have devised a few acceptance tests that demonstrate this behavior. First, let’s define a view model:

```csharp
class TestViewModel : BindableBase
{
    public TestViewModel()
    {
        SimpleCommand = new ExpressionCommand(OnExecute, () => Value1 > 5);
    }

    public ICommand SimpleCommand { get; private set; }

    private int _value1;
    public int Value1
    {
        get { return _value1; }
        set { SetProperty(ref _value1, value, "Value1"); }
    }

    private void OnExecute() { }
}
```

And let’s just test the basic behavior of the command.

```csharp
[Test]
public void TestSimpleCommand()
{
    var sut = new TestViewModel();
    int updateCount = 0;
    sut.SimpleCommand.CanExecuteChanged += (s, e) => updateCount++;

    sut.Value1 = 3;

    updateCount.ShouldEqual(1);
}
```

And there it is. No extra management of CanExecute, just the syntax you’re probably already used to with DelegateCommand. Except this time, it works the way you think it should.

Now let’s try out a property chain and an ObservableCollection. Here are our view models:

```csharp
private class TestViewModel : BindableBase
{
    public TestViewModel()
    {
        ComplexCommand = new ExpressionCommand(OnExecute, () => Value2.Value4.Value3 > 5);
        ComplexCollectionCommand = new ExpressionCommand(OnExecute, () => Value2.Value5.Contains(Value2));
    }

    public ICommand ComplexCommand { get; private set; }
    public ICommand ComplexCollectionCommand { get; private set; }

    private int _value1;
    public int Value1
    {
        get { return _value1; }
        set { SetProperty(ref _value1, value, "Value1"); }
    }

    private TestSubViewModel _value2;
    public TestSubViewModel Value2
    {
        get { return _value2; }
        set { SetProperty(ref _value2, value, "Value2"); }
    }

    private void OnExecute() { }
}

private class TestSubViewModel : BindableBase
{
    private int _value3;
    public int Value3
    {
        get { return _value3; }
        set { SetProperty(ref _value3, value, "Value3"); }
    }

    private TestSubViewModel _value4;
    public TestSubViewModel Value4
    {
        get { return _value4; }
        set { SetProperty(ref _value4, value, "Value4"); }
    }

    public ObservableCollection<TestSubViewModel> Value5 = new ObservableCollection<TestSubViewModel>();
}
```

And the tests:

```csharp
[Test]
public void TestComplexCommand()
{
    var sut = new TestViewModel
    {
        Value2 = new TestSubViewModel()
    };
    int updateCount = 0;
    sut.ComplexCommand.CanExecuteChanged += (s, e) => updateCount++;

    sut.Value2 = new TestSubViewModel();
    updateCount.ShouldEqual(1);

    sut.Value2.Value4 = new TestSubViewModel();
    updateCount.ShouldEqual(2);

    sut.Value2.Value4.Value3 = 3;
    updateCount.ShouldEqual(3);
}

[Test]
public void TestComplexCollectionCommand()
{
    var sut = new TestViewModel
    {
        Value2 = new TestSubViewModel()
    };
    int updateCount = 0;
    sut.ComplexCollectionCommand.CanExecuteChanged += (s, e) => updateCount++;

    sut.Value2 = new TestSubViewModel();
    updateCount.ShouldEqual(1);

    sut.Value2.Value5.Add(sut.Value2);
    updateCount.ShouldEqual(2);
}
```

Even when complex properties are completely reassigned, ExpressionCommand knows to resubscribe to the whole tree again.

I won’t get into the implementation of ExpressionCommand in this article as it’s pretty heavy into LINQ expressions, but I have added all the code into my InpcTemplate on GitHub.

10 Nov 2011

State is a tricky thing in Rx, especially when we have more than one subscriber to a stream. Consider a fairly innocuous setup:

```csharp
var interval = Observable.Interval(TimeSpan.FromMilliseconds(500));
interval.Subscribe(i => Console.WriteLine("First: {0}", i));
interval.Subscribe(i => Console.WriteLine("Second: {0}", i));
```

At first glance it might look like I’m setting up two listeners to a single interval pulse, but what’s actually happening is that each time I call Subscribe, I’ve created a new timer to tick values. Imagine how bad this could be if instead of an interval I was, say, sending a message across the network and waiting for a response.

There are a few ways to solve this problem, but only one of them is actually correct. The first thing most people latch on to in IntelliSense is Publish(). Now that method looks useful. So I might try:

```csharp
var interval = Observable.Interval(TimeSpan.FromMilliseconds(500)).Publish();
interval.Subscribe(i => Console.WriteLine("First: {0}", i));
interval.Subscribe(i => Console.WriteLine("Second: {0}", i));
```

Now I get nothing at all. Great advertising there.

So what is actually happening here? Well, for one you might notice that interval is no longer IObservable<long> but is now an IConnectableObservable<long>, which extends IObservable with a single method: IDisposable Connect().

As it turns out, Publish is simply a convenience method for Multicast that supplies the parameter for you. Specifically, calling stream.Publish() is exactly the same as calling stream.Multicast(new Subject<T>()). Wait, a subject?

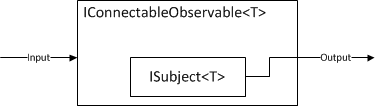

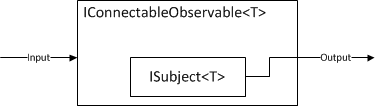

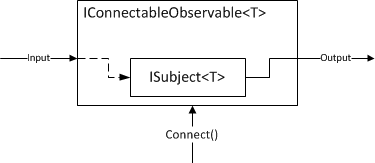

What Multicast does is create a concrete implementation of IConnectableObservable<T> that wraps the subject we give it and forwards its Subscribe method to Subject<T>.Subscribe, so it looks something like this:

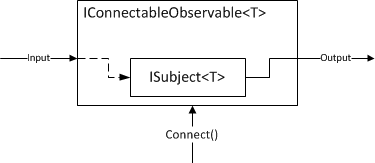

You might have noticed that the input doesn’t go anywhere. That’s exactly why our simple call to Publish() earlier didn’t produce any results at all – IConnectableObservable<T> hadn’t been fully wired up yet. To do that, we need to make a call to Connect(), which will subscribe our input into our subject.

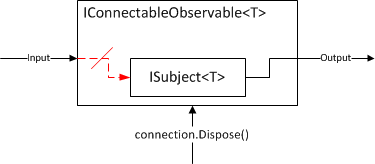

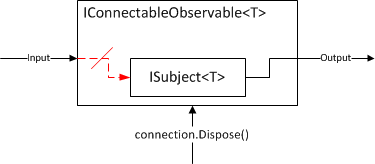

Connect() returns to us an IDisposable which we can use to cut off the input again. Keep in mind the downstream observers have no idea any of this is happening. When we disconnect, OnCompleted will not be fired.
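To watch the valve work without real timers, here is a hypothetical, single-threaded Python model of Subject, Multicast and Connect. `TimerSource` stands in for Observable.Interval and counts how many independent "timers" it has started; observers are plain callables and disposables are zero-argument callables. It mirrors the diagrams above rather than Rx's actual implementation, and it leaves out OnError and OnCompleted.

```python
class TimerSource:
    """Cold source: every subscribe() starts a separate 'timer', like Observable.Interval."""

    def __init__(self):
        self.timers_started = 0
        self._running = []

    def subscribe(self, observer):
        self.timers_started += 1
        self._running.append(observer)
        return lambda: self._running.remove(observer)  # dispose: stop this timer

    def tick(self, value):
        """Simulate every running timer firing once."""
        for observer in list(self._running):
            observer(value)


class Subject:
    """Both an observer (on_next) and an observable (subscribe)."""

    def __init__(self):
        self._observers = []

    def on_next(self, value):
        for observer in list(self._observers):
            observer(value)

    def subscribe(self, observer):
        self._observers.append(observer)
        return lambda: self._observers.remove(observer)


class ConnectableObservable:
    """What Multicast builds: subscribers only ever see the subject."""

    def __init__(self, source, subject):
        self._source = source
        self._subject = subject

    def subscribe(self, observer):
        return self._subject.subscribe(observer)

    def connect(self):
        # Wire the input into the subject; the returned disposable cuts it off again.
        return self._source.subscribe(self._subject.on_next)


def publish(source):
    return ConnectableObservable(source, Subject())


source = TimerSource()
interval = publish(source)
first, second = [], []
interval.subscribe(first.append)
interval.subscribe(second.append)
source.tick(0)                   # not connected yet, so nobody sees 0
connection = interval.connect()
source.tick(1)
connection()                     # disconnect; the observers are not told
source.tick(2)
print(first, second, source.timers_started)  # [1] [1] 1
```

Subscribing both observers directly to a fresh `TimerSource` instead would start two timers, which is the duplication from the first example.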

Getting back to my example, the correct code looks like this:

```csharp
var interval = Observable.Interval(TimeSpan.FromMilliseconds(500)).Publish();
interval.Subscribe(i => Console.WriteLine("First: {0}", i));
interval.Subscribe(i => Console.WriteLine("Second: {0}", i));
var connection = interval.Connect();

// Later
connection.Dispose();
```

It is very important to make sure all of your subscribers are set up before you call Connect(). You can think of Publish (or, really, Multicast) like a valve on a pipe. You want to be sure you have all your pipes together and sealed before you open it up, otherwise you’ll have a mess.

A problem that comes up fairly often is what do you do when you do not control the number or timing of subscriptions? For instance, if I have a stream of USD/EUR market rates, there’s no need for me to keep that stream open if nobody is listening, but if someone is, I’d like to share that connection, rather than create a new one.

This is where RefCount() comes in. RefCount() takes an IConnectableObservable<T> and returns an IObservable<T>, but with a twist. When RefCount gets its first subscriber, it automatically calls Connect() for you and keeps the connection open as long as anyone is listening; once the last subscriber disconnects, it calls Dispose on its connection token.

So now you might be wondering why I didn’t use RefCount() in my so-called “correct” implementation. I wouldn’t have had to call either Connect() or Dispose, and less is more, right? All that is true, but it omits the cost of safety. Once I dispose my connection, my source no longer holds a reference to my object, which allows the GC to do what it does best. Often, these streams start to make their way outside of my class, which can create a long dependency chain of object references. That’s fine, but if I dispose an object in the middle, I want to make sure that that object is now ready for collection, and if I use RefCount(), I simply can’t make that assertion, because I’d have to ensure every downstream subscriber had also disposed.

Another scenario that comes up is how to keep a record of things you’ve already received. For instance, I might make a call to find all tweets with the hashtag “#RxNet” with live updates. If I subscribe a second observer, I might expect all the previously found data to be sent again without making a new request to the server. Fortunately, we have Replay() for this. It literally has 15 overloads, which cover just about every permutation of windowing by count and/or time and supplying an optional scheduler and/or selector. The parameterless call, however, just remembers everything. Replay is just like Publish in the sense that it also forwards a call to Multicast, but this time with a ReplaySubject<T>.

Now the temptation is to combine Replay() and RefCount() to make caches of things for subscribers when they are needed. Let’s look at my Twitter example.

```csharp
tweetService.FindByHashTag("RxNet").Replay().RefCount()
```

When the first observer subscribes, FindByHashTag will make a call (I assume this is deferred) to the network and start streaming data, and all is well. When the second observer subscribes, he gets all the previously found data and updates. Great! Now, let’s say both unsubscribe and a third observer then subscribes. He’s going to get all that previous data, and then the deferred call in FindByHashTag is going to be re-triggered and provide results that we might have already received from the replay cache! Instead, we should implement a caching solution that actually does what we expect and wrap it in an Observable.Create, or if we expect only fresh tweets, use Publish().RefCount() instead.
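The Replay().RefCount() pitfall is easy to reproduce in a small, single-threaded Python model. Everything here is hypothetical: `FakeTweetSearch` stands in for the deferred FindByHashTag call (each subscription counts as a new request, and it never completes, like a live search), and `ReplaySubject`, `ConnectableObservable` and `RefCount` are simplified models of the Rx types with no error, completion, threading, or double-dispose handling. The model subscribes the observer before connecting, so values from a synchronous source are not missed.

```python
class FakeTweetSearch:
    """Cold, never-completing source: each subscription is a new 'request'."""

    def __init__(self, results):
        self.results = results
        self.requests = 0

    def subscribe(self, observer):
        self.requests += 1
        for tweet in self.results:
            observer(tweet)
        return lambda: None  # a real search would stop its live updates here


class ReplaySubject:
    """Remembers every value and replays the history to each new subscriber."""

    def __init__(self):
        self._history = []
        self._observers = []

    def on_next(self, value):
        self._history.append(value)
        for observer in list(self._observers):
            observer(value)

    def subscribe(self, observer):
        for value in self._history:
            observer(value)
        self._observers.append(observer)
        return lambda: self._observers.remove(observer)


class ConnectableObservable:
    def __init__(self, source, subject):
        self._source, self._subject = source, subject

    def subscribe(self, observer):
        return self._subject.subscribe(observer)

    def connect(self):
        return self._source.subscribe(self._subject.on_next)


class RefCount:
    """Connects on the first subscriber and disconnects when the last one leaves."""

    def __init__(self, connectable):
        self._connectable = connectable
        self._count = 0
        self._connection = None

    def subscribe(self, observer):
        subscription = self._connectable.subscribe(observer)
        self._count += 1
        if self._count == 1:
            self._connection = self._connectable.connect()

        def dispose():
            subscription()
            self._count -= 1
            if self._count == 0:
                self._connection()
                self._connection = None

        return dispose


search = FakeTweetSearch(["tweet 1", "tweet 2"])
tweets = RefCount(ConnectableObservable(search, ReplaySubject()))  # Replay().RefCount()
first, second, third = [], [], []
dispose_first = tweets.subscribe(first.append)    # connects: request #1
dispose_second = tweets.subscribe(second.append)  # served from the replay cache
dispose_first()
dispose_second()                                  # count hits zero: disconnect
tweets.subscribe(third.append)                    # cache replay, then request #2
print(third)  # ['tweet 1', 'tweet 2', 'tweet 1', 'tweet 2']
```

The third observer sees every tweet twice: once from the cache and once from the re-triggered request, which is exactly the duplication described above.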

16 Sep 2011

There’s been no shortage of news and non-news coming out of Build Conference this week, and I suspect there will continue to be no shortage of the same for the next few months. We’ve learned that WPF isn’t quite dead and Silverlight isn’t quite dead, but both are in the process of being “reimagined,” whatever that means. Metro is clearly the first step of that process, and it provokes sharply divided responses from developers.

I sat down to breakfast this morning with a couple of guys I hadn’t met. Since Build Conference brought together both ISVs and IHVs, I greeted them with, “Software or hardware?” The man across the table told me he was involved with software, while the guy to my right ignored me entirely. Without quite playing 20 Questions, I found out that the more vocal one was a client-side developer in health care. He told me that he was very excited about the new Metro design and especially about the upcoming Windows 8 tablets. “Right now, everything is moving to Electronic Medical Records. So, when a doctor goes in to see a patient, the first thing he does is turn his back on that patient and bring up the records on a desktop. A tablet is much more like the clipboards doctors used to use.” And, of course, Metro plays a huge role in the realization of that use case; a friendly, full-screen, touch-accelerated app is intrinsic to the paradigm of a tablet. Not only that, but easy access to peripherals, especially the webcam, makes the Windows Runtime (WinRT) a compelling API. As an added bonus, he pointed out that he could move his current ASP.NET development stack and programmers to C# and XAML, or even HTML5 and JavaScript, to produce a Metro app much more easily than he could move them to Cocoa and Objective-C to make an iPad app. Without a doubt, this is what Microsoft had envisioned.

At the opposite end of the spectrum, I met a couple of developers who maintain an institutional client-facing application written in MFC. “There’s over three hundred screens in our application. No way can we fit that into Metro.” He went on to explain that their application opens direct connections to SQL Server instances located on both the client and server tiers. “I have to figure out how to go back and tell my boss that there’s just no way we can move over to Metro.”

I don’t know whether he was aware of the seemingly obvious flaws in his app’s design, or that Microsoft was encouraging developers to forsake the all-too-familiar MFC app design he showed me. “It’d be a complete rewrite;” that much we completely agreed upon.

There is a continuing consensus around the notion that WinRT is by far the most ambitious thing Microsoft has done in decades. Perhaps in a lot of ways, Windows XP is an apt analog of Win32 programming: it’s been around for so long, it’s in use in so many places, and it’s so well known, that it will take a very, very long time to unwind from it. Windows 8 and WinRT have enormous potential to revitalize the failing Windows desktop software market. All that remains to be seen is whether developers choose to capitalize upon it or cut their losses and move on.

06 Aug 2011

I’ve been using Powershell for just over a year now, and its effect on my development workflow has been steadily increasing. Looking back, I have no doubt that it is the most important tool in my belt – to be perfectly honest, I’d rather have Powershell than Visual Studio now. Of course, that’s not to say Visual Studio isn’t useful – it is – but rather that Powershell fills an important role in the development process that isn’t even approached by other tools on the platform. Visual Studio may be the best IDE on the market, but at the end of the day, there are other tools that can replace it, albeit imperfectly.

To take some shavings off the top of the iceberg that is why I use Powershell, I’d like to share a recent experience of Powershell delivering for me.

Setup for Failure

As a co-organizer of the New York .NET Meetup, I was tasked with getting the list of attendees off of meetup.com and over to the lovely people at Microsoft who kindly give us space to meet. Now, you might think there’d be some nifty “export attendee list to CSV” function on the meetup.com website, but like a lot of software, it doesn’t do half the things you think it should, and this is one of those things. Usually my colleague David assembles the list, but this particular month he was out on vacation. He did, however, point me to a GitHub repository for a tool that would do the extraction.

Following his advice, I grabbed the repository and brought up the C#/WinForms solution in Visual Studio. Looking at the project structure, I was a bit stunned at the scale of it all. The author had divided his concerns very well into UI, data access, the core infrastructure, and, of course, unit testing. I thought that was pretty peculiar considering all I wanted to do was get a CSV of names and such off of a website. Far be it from me to criticize another developer’s fastidiousness. Maybe it also launched space shuttles; you never know.

In what I can only now describe as naivety, I hit F5 and expected something to happen.

I was rewarded with 76 errors.

Right off the bat, I realized that the author had botched a commit and forgotten a bunch of libraries. I was able to find NHibernate fairly easily with NuGet, but had no luck with “Rhino.Commons.NHibernate”. I tried to remove the dependency, but didn’t have much luck there either. And the whole time I was wondering why the hell you needed all these libraries to extract a damn CSV from the internet.

The Problem

Rather than throw more time after the problem, I decided to forge out on my own. Really, how hard could it be to

- Get an XML doc from the internet

- Extract the useful data

- Perform some heuristics on the names

- Dump a CSV file

Being a long-time C# programmer, my knee-jerk reaction was to build a solution in that technology. Forgoing a GUI to spend as little time as possible in building this, I’d probably build a single file design that ostensibly could consist of a single method. And if that were the case, why not script it?

So if I was going to write a script, what should I use? I could write it in JavaScript and run it on node.js, but it’s lacking proper CSV utilities and I’d have to run it on something other than my main Windows box. Not to mention I don’t particularly like writing in JavaScript, so I’d probably write it in CoffeeScript and have to compile it, etc., etc.

I briefly considered writing an F# script, but I suspect only about ten people would know what on earth it was, and, at the end of the day, I would like to share my script to others.

The Solution

In the end, I concluded what I had known already: Powershell was the tool to use. It had excellent support for dealing with XML (via the [xml] type accelerator) and, as a real scripting language, had no pomp and circumstance.

Here’s the script I ended up writing:

```powershell
function Get-MeetupRsvps([string]$eventId, [string]$apiKey) {
  $nameWord = "[\w-']{2,}"
  $regex = "^(?'first'$nameWord) ((\w\.?|($nameWord )+) )?(?'last'$nameWord)|(?'last'$nameWord), ?(?'first'$nameWord)( \w\.|( $nameWord)+)?$"

  function Get-AttendeeInfo {
    process {
      $matches = $null
      $answer = $_.answers.answers_item
      if(-not ($_.name -match $regex)) { $answer -match $regex | Out-Null }
      return New-Object PSObject -Property @{
        'FirstName' = $matches.first
        'LastName' = $matches.last
        'RSVPName' = $_.name
        'RSVPAnswer' = $answer
        'RSVPGuests' = $_.guests
      }
    }
  }

  $xml = [Xml](New-Object Net.WebClient).DownloadString("https://api.meetup.com/rsvps.xml?event_id=$eventId`&key=$apiKey")
  $xml.SelectNodes('/results/items/item[response="yes"]') `
    | Get-AttendeeInfo `
    | select FirstName, LastName, RSVPName, RSVPAnswer, RSVPGuests
}
```
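For comparison, the same steps (select the "yes" RSVPs, try the RSVP name and then the RSVP answer against the name heuristic, emit CSV) can be sketched with Python's standard library. This is a hypothetical port for illustration only: it parses an XML string instead of calling the meetup.com API, assumes the response shape implied by the XPath and property names in the script above, and splits the regex into its two alternatives because Python's `re` does not accept the duplicate group names that .NET allows. The heuristic itself is ported as-is, quirks included.

```python
import csv
import io
import re
import xml.etree.ElementTree as ET

NAME_WORD = r"[\w'-]{2,}"
# The two alternatives of the PowerShell regex: "First [Middle] Last" and "Last, First ...".
FIRST_LAST = re.compile(
    rf"^(?P<first>{NAME_WORD}) (?:(?:\w\.?|(?:{NAME_WORD} )+) )?(?P<last>{NAME_WORD})")
LAST_FIRST = re.compile(
    rf"(?P<last>{NAME_WORD}), ?(?P<first>{NAME_WORD})(?: \w\.|(?: {NAME_WORD})+)?$")
FIELDS = ["FirstName", "LastName", "RSVPName", "RSVPAnswer", "RSVPGuests"]


def match_name(text):
    # Mirrors -match on "^A|B$": A can only match at the start, B anywhere.
    if not text:
        return None
    return FIRST_LAST.match(text) or LAST_FIRST.search(text)


def get_rsvps(xml_text):
    rows = []
    for item in ET.fromstring(xml_text).findall("items/item[response='yes']"):
        name = item.findtext("name")
        answer = item.findtext("answers/answers_item")
        m = match_name(name) or match_name(answer)  # fall back to the RSVP answer
        rows.append({
            "FirstName": m and m.group("first"),
            "LastName": m and m.group("last"),
            "RSVPName": name,
            "RSVPAnswer": answer,
            "RSVPGuests": item.findtext("guests"),
        })
    return rows


def to_csv(rows):
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS, quoting=csv.QUOTE_ALL)
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


SAMPLE = """<results><items>
  <item><name>Jane Doe</name><response>yes</response><guests>0</guests>
    <answers><answers_item>Jane Doe</answers_item></answers></item>
  <item><name>jd</name><response>yes</response><guests>1</guests>
    <answers><answers_item>Doe, John</answers_item></answers></item>
  <item><name>Sam Smith</name><response>no</response><guests>0</guests></item>
</items></results>"""

print(to_csv(get_rsvps(SAMPLE)))
```

Because `get_rsvps` returns plain dictionaries, filtering before export is a list comprehension, e.g. `[r for r in rows if r["FirstName"] != "Thorsten"]`.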

To dump this to a CSV file is then really easy:

```powershell
Get-MeetupRsvps -EventId 1234 -ApiKey 'MyApiKey' | Export-Csv -Path rsvps.csv -NoTypeInformation
```

And because of this design, it’s really extensible. Potentially, instead of exporting to a CSV, you could pipe the information into another processor that would remove anyone named “Thorsten.” Actually, that would look like this:

```powershell
Get-MeetupRsvps -EventId 1234 -ApiKey 'MyApiKey' `
  | ? { $_.FirstName -ne 'Thorsten' } `
  | Export-Csv -Path rsvps.csv -NoTypeInformation
```

It’d be pretty difficult to do that if I’d written a C# executable – you’d have to go into Excel to do that. Or write a Powershell script. Just saying.

Here’s the real kicker: I spent all of five minutes writing my Powershell script and then spent minutes tweaking my regex to identify as many names as possible. I didn’t need to recompile, just F5 again in Powershell ISE, which you have installed already if you’re on Windows 7. Since I left the Export-Csv part off during debugging, I could just read the console output and see what I got.

When I was happy with my output, it was dead simple to distribute: throw it in a GitHub Gist and move on with my life. If you decide to use it, all you need is Powershell installed (again, are you on Windows 7?) and the ability to copy and paste. No libraries. No worries. If you don’t like my regex, it couldn’t be easier to figure out how to replace it. If you want more fields, it’s easy to see where they should be added.

It’s really just that easy.